Solving the Game of SET: Part II

Recap - Part I

Be sure to read through Part I for some background on my initial solution. We left off with a really amazing program that can solve a game of SET from a static image.

Unfortunately, running that solution on live video exposed a few flaws:

Issues to Address

Here’s what I’ll try and fix in Part II:

- Fix solution jitters in live stream
  - Naively applying the static-image solution to a live stream results in a ton of jumping around, because the solution is not sorted by location.
- More robust fill detection
  - Use the corner of the card as a reference value to determine what zero-fill looks like.
- Apply solution when change is detected
  - This one might not be important, but it more accurately reflects how humans perceive the game and recognize change. We’ll try to apply our solution only when the board has changed, not on every single frame. This should also help eliminate solution jitters.

1. Fixing Card Locations

The first step in fixing these jitters is to sort the card array by (x, y) location. Currently the array is left unsorted (or rather, it’s sorted by card size), which leaves the final order pretty random. Technically this doesn’t affect the solution, because the game doesn’t depend on card location. On still images it doesn’t matter, but it does affect how the solution gets displayed in a live feed. Videos are always trickier than still images…

First, we fix the card location like so:

    def generate_cards(self):
        info = []
        for card in self.card_array:
            self.card_parameters.append([card.number, card.shape, card.fill, card.color])
            info.append([card.x, card.y, card.number, card.shape, card.fill, card.color])
        info = np.array(info)
        # Sort by a location key (x + 2*y) so the card order is the same from frame to frame.
        idx = np.argsort(info[:, 0] + 2*info[:, 1])
        self.card_info = info[idx]

The sort key (x + 2y) is pretty arbitrary, but it is very simple and works really well.
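To see why a location-based key removes the jitter, here is a minimal sketch in plain Python. The card tuples and their coordinates are made up for illustration; only the key `x + 2*y` comes from the snippet above. The same four cards are "detected" in two different orders, and sorting by the key yields the same display order both times.

```python
def sort_key(card):
    """Location key from the post: x plus twice y."""
    x, y = card[0], card[1]
    return x + 2 * y

# Hypothetical detections as (x, y, label): same cards, two detection orders.
frame_a = [(300, 40, "C"), (20, 40, "A"), (160, 40, "B"), (20, 220, "D")]
frame_b = [(20, 220, "D"), (160, 40, "B"), (300, 40, "C"), (20, 40, "A")]

order_a = [card[2] for card in sorted(frame_a, key=sort_key)]
order_b = [card[2] for card in sorted(frame_b, key=sort_key)]
print(order_a, order_b)
```

Note that this key is not a strict row-by-row ordering: a card far to the right in one row can sort after a card at the left of the next row. That is fine here, since the order only needs to be consistent between frames.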

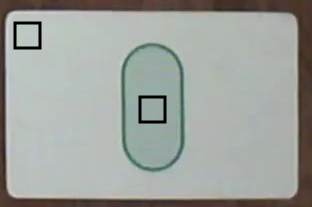

2. More Robust Fill Detection

The next issue I found was that my method for determining symbol fill wasn’t as robust as I thought. Some of the jumping around in the solution happens because my analysis of the cards is jumping around. My method for fill analysis had been to run an edge detector on the fill: if we found edges, then we knew the symbol was hash filled. Unfortunately, this doesn’t work reliably with low-quality webcam images, because the resolution isn’t fine enough to distinguish the hash lines. I found that my original approach of comparing the distance between a patch at the center of the symbol and a patch in the corner of the card worked much better. As you can tell, green is particularly tricky because the camera already gives everything quite a bit of a green tinge.

I’ve found an 8x8 patch works well - here’s a snippet:

    def find_fill(self):
        # Reference patch from the card corner: what zero-fill looks like.
        self.normalize_patch = self.warp[0:2 * height, 0:2 * width, :]
        # Patch from the center of the symbol.
        self.fill_patch = self.symbol_img[h_min:h_max, w_min:w_max]

        # Compare the mean color of the two patches.
        normal_mean = np.mean(self.normalize_patch, axis=(0,1))
        patch_mean = np.mean(self.fill_patch, axis=(0,1))
        distance = mse(normal_mean, patch_mean)

        # The edge count is stored but not used in the decision below.
        sym_edge = cv2.Canny(self.fill_patch, 10, 50)
        fill_cnt = (sym_edge > 0).sum()
        self.temp = (fill_cnt, np.round(distance, 0))
        self.edged = sym_edge

        if distance > 100:
            self.fill_str = 'Solid'
        elif 0 <= distance <= 30:
            self.fill_str = 'Empty'
        else:
            self.fill_str = 'Hash'
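The decision rule is easy to try on its own. Below is a rough, standalone sketch that assumes `mse` means the mean squared error between the two mean colors (the snippet above does not show its definition). The thresholds 30 and 100 are the ones from the snippet; the color values are invented for illustration.

```python
def mse(a, b):
    """Mean squared error between two equal-length color vectors (assumed definition)."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

def classify_fill(corner_mean, symbol_mean, empty_max=30, solid_min=100):
    """Classify fill from the distance between corner and symbol mean colors."""
    distance = mse(corner_mean, symbol_mean)
    if distance > solid_min:
        return "Solid"
    if distance <= empty_max:
        return "Empty"
    return "Hash"

corner = (200, 205, 198)  # hypothetical mean color of the blank card corner

print(classify_fill(corner, (199, 204, 197)))  # nearly identical to the corner
print(classify_fill(corner, (192, 197, 190)))  # slightly darker than the corner
print(classify_fill(corner, (60, 160, 70)))    # strongly colored
```

Because hashed symbols sit in a narrow band between the two thresholds, small lighting changes can push them into either neighbor, which matches the trouble with hashed cards described later in the post.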

3. Robust to Live Video Streams

The final changes I made to create a more robust solution are specific to video feeds. We can eliminate a lot of noise in our feed by averaging frames with cv2.accumulateWeighted().

    cv2.accumulateWeighted(next_img, result, .4)
    result = cv2.convertScaleAbs(result)
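For intuition, `cv2.accumulateWeighted(src, dst, alpha)` updates the running average as `dst = (1 - alpha) * dst + alpha * src` for every pixel, and it expects `dst` to be a floating-point accumulator. The sketch below reproduces that update in plain Python on a single made-up "pixel" whose true value is 100 but which flickers by ±20 from frame to frame; the frame values are illustrative only.

```python
def accumulate_weighted(src, dst, alpha):
    """Blend a new frame into the running average: (1 - alpha) * dst + alpha * src."""
    return [(1 - alpha) * d + alpha * s for s, d in zip(src, dst)]

# Hypothetical one-pixel frames: true value 100, noisy readings.
frames = [[100.0], [120.0], [80.0], [110.0], [90.0]]

avg = frames[0]
history = []
for frame in frames[1:]:
    avg = accumulate_weighted(frame, avg, 0.4)
    history.append(avg[0])

print(history)
```

With a weight of 0.4 the averaged value stays noticeably closer to 100 than the raw readings do. A smaller weight smooths more but reacts more slowly when a card is actually moved.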

I also check for motion by subtracting consecutive frames and thresholding the result. If the frames are identical, the result is a purely black frame; any differences show up as white pixels. We can check for motion simply by adding up the white pixels and comparing the total against a threshold. In addition, we’ll maintain a buffer of the last n frames, so the scene only counts as still once all n frames in a row are below the threshold. The pop method of Python lists makes this easy.

    diff = cv2.subtract(result, old_frame)
    thresh_diff = threshold_img(bw_filter(diff))

    # Keep one motion score per frame, for the last buffer_size frames only.
    thresh_buffer.append(thresh_diff.sum())
    if len(thresh_buffer) > buffer_size:
        thresh_buffer.pop(0)

    # The scene is still only when every buffered score is below the threshold.
    motion = not np.all(np.array(thresh_buffer) < 5e-4)

    # Solve once per still scene, not on every frame.
    if not motion and not scene_processed:
        scene = ProcessScene(img)
        scene.process()
        game = PlayGame(res1, [scene])
        game.solve()
        scene_res = game.draw_solution()
        scene_processed = True

    if not motion and scene_processed:
        txt = "Card Count: " + str(scene.card_count)
    else:
        txt = "Motion Detected..."
        scene_processed = False

This helps keep the scene from being processed until something changes. It also locks our view in place - so the solution doesn’t jump around.
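The same idea can be tried without a camera. The sketch below is a plain-Python stand-in, not the code from the repo: `motion_score`, `MotionGate`, the cutoff `level=25`, and the tiny frames are all hypothetical. It makes two deliberate changes. It uses an absolute difference (the equivalent of `cv2.absdiff`), because `cv2.subtract` on 8-bit images saturates at zero and would miss pixels that got darker. It also reports motion until the buffer is full, so the first solve waits for a full window of still frames.

```python
from collections import deque

def motion_score(new, old, level=25):
    """Fraction of pixels whose absolute change exceeds `level` (hypothetical cutoff)."""
    changed = sum(1 for row_new, row_old in zip(new, old)
                  for p, q in zip(row_new, row_old) if abs(p - q) > level)
    total = sum(len(row) for row in new)
    return changed / total

class MotionGate:
    """Reports 'still' only after buffer_size consecutive low-motion frames."""

    def __init__(self, buffer_size=5, threshold=5e-4):
        self.scores = deque(maxlen=buffer_size)  # oldest score drops off automatically
        self.threshold = threshold

    def update(self, score):
        """Record one frame's score; return True while motion is suspected."""
        self.scores.append(score)
        full = len(self.scores) == self.scores.maxlen
        still = full and all(s < self.threshold for s in self.scores)
        return not still

still_frame = [[10, 10, 200], [10, 10, 200]]
moved_frame = [[10, 90, 120], [10, 10, 200]]  # one pixel brighter, one darker
frames = [still_frame] * 4 + [moved_frame] + [still_frame] * 4

gate = MotionGate(buffer_size=3)
states, solves, processed = [], 0, False
for old, new in zip(frames, frames[1:]):
    motion = gate.update(motion_score(new, old))
    states.append(motion)
    if motion:
        processed = False
    elif not processed:
        solves += 1  # stand-in for running the solver once per still scene
        processed = True

print(states, solves)
```

Using `deque(maxlen=...)` replaces the manual append-and-pop bookkeeping, and the solver stand-in runs once per still scene rather than once per frame.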

Here’s an entire deck being played through:

Overall it works really well. The hardest part is translating the camera image to the cards that I’m supposed to pick up (given that my video is flipped!). It’s not the easiest to watch, because the cards just disappear: the screen doesn’t update when there is too much motion. As you can see in the very first set, the algo still has some trouble with hashed cards; I might have to figure out how to make that a bit more robust.

The code can be found here